Abstract

Recent advances in deep learning have revolutionized the field of machine learning, enabling unprecedented performance and many new real-world applications. Yet the developments that led to this success have often been driven by empirical studies, and little is known about the theory behind some of the most successful approaches. While theoretically well-founded deep learning architectures had been proposed in the past, they came at the price of increased complexity and reduced tractability. Recently, we have witnessed considerable interest in principled deep learning. This has led both to a better theoretical understanding of existing architectures and to the development of more mature deep models with solid theoretical foundations. In this workshop, we intend to review the state of these developments and provide a platform for the exchange of ideas between theoreticians and practitioners in the growing deep learning community. Through a series of invited talks by experts in the field, contributed presentations, and an interactive panel discussion, the workshop will cover recent theoretical developments, provide an overview of promising and mature architectures, highlight their challenges and unique benefits, and present the most exciting recent results.

Topics of interest include, but are not limited to:

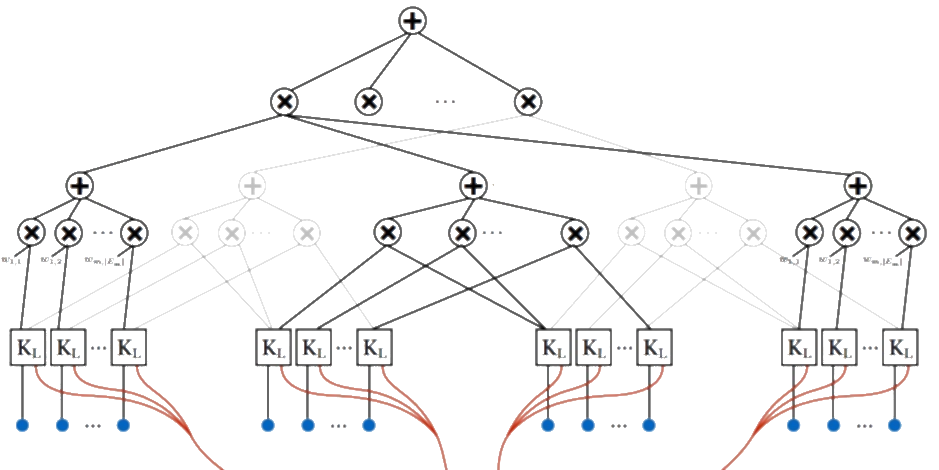

- Deep architectures with solid theoretical foundations

- Theoretical understanding of deep networks

- Theoretical approaches to representation learning

- Algorithmic and optimization challenges, alternatives to backpropagation

- Probabilistic, generative deep models

- Symmetry, transformations, and equivariance

- Practical implementations of principled deep learning approaches

- Domain-specific challenges of principled deep learning approaches

- Applications to real-world problems